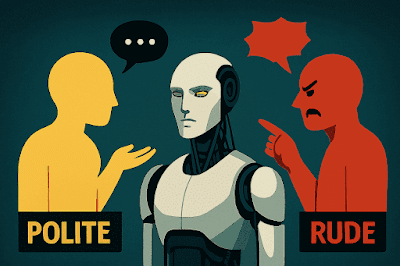

🚀 Introduction: When Rudeness Becomes a Catalyst for Artificial Intelligence

In an era where companies race to build more polite and empathetic AI models, a study from Penn State University poses an unexpected question: what if rudeness is the key to improving AI performance?

The results were surprising: the model tested answered more accurately when the prompt suggested the user was angry or dissatisfied. It is as if verbal pressure sharpens its focus, much like a customer service agent who reacts quickly to a sharp complaint.

This paradox opens the door to deeper questions: does AI truly understand tone? Or is it merely reflecting its training data? And should we rethink how we interact with these intelligent systems?

In this article, we’ll dive into the study’s details, analyze its findings, and explore possible explanations for how the tone of a prompt alone can reshape a model’s responses.

📊 Why Do Language Models Perform Better Under Rudeness?

At the heart of the Penn State study lies a provocative question: does user tone affect AI performance?

The data suggest the answer is yes. Researchers posed 50 multiple-choice questions to ChatGPT, each phrased in tones ranging from extremely polite to extremely rude, and found that the model performed better under the harsher wording. Accuracy rose from 80.8% with polite prompts to 84.8% with rude ones, a gap of 4 percentage points, and in some cases up to 6%.
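
Because the results are reported as percentages, it helps to see how such figures are tallied and compared. The short Python sketch below is illustrative only: the helper names and the toy graded answers are hypothetical, not the study’s code or data. It shows how per-tone accuracy could be computed from graded answers, and why 80.8% versus 84.8% is a 4-percentage-point gap but roughly a 5% relative improvement.

```python
from collections import defaultdict

def accuracy_by_tone(records):
    """Tally accuracy (in %) per tone from (tone, is_correct) pairs."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for tone, is_correct in records:
        total[tone] += 1
        correct[tone] += int(is_correct)
    return {tone: 100.0 * correct[tone] / total[tone] for tone in total}

def point_gap(before, after):
    """Absolute difference between two accuracies, in percentage points."""
    return after - before

def relative_change(before, after):
    """Relative change between two accuracies, in percent of the first."""
    return 100.0 * (after - before) / before

# Toy graded answers (made up for illustration, not the study's data).
toy = [("polite", True), ("polite", False), ("rude", True), ("rude", True)]
print(accuracy_by_tone(toy))  # {'polite': 50.0, 'rude': 100.0}

# The two accuracy figures quoted in the article.
polite, rude = 80.8, 84.8
print(f"{point_gap(polite, rude):.1f} percentage points")  # 4.0 percentage points
print(f"{relative_change(polite, rude):.1f}% relative")    # 5.0% relative
```

The distinction matters when reading headlines: a claim of "4%" and a claim of "5%" can describe the same two numbers.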

But this doesn’t mean the model “understands” anger or emotions like a human.

The researchers stressed that AI lacks emotional awareness. Instead, it reacts to linguistic signals it learned during training. One possible explanation is that phrasing which signals dissatisfaction nudges the model toward more careful, precise answers, as if it were trying to prove its competence or correct a perceived error.

This resembles real-world customer service dynamics, where agents often respond faster and more attentively to sharp complaints than to casual inquiries. Pressure seems to drive performance, and the model, despite having no feelings of its own, appears to follow a similar pattern.

What makes these results even more compelling is the nature of the questions used. They weren’t casual queries but multiple-choice tests requiring specialized knowledge and logical reasoning. The model wasn’t just chatting—it was being tested under linguistic stress.

And here lies the paradox: the sharper the question, the sharper the answer. It’s as if the language model responds not to kindness but to challenge.

🔹 Ethical Reactions and Design Questions: Should We Rethink AI Training?

As the study gained traction, reactions varied widely. Some saw it as a breakthrough in understanding AI behavior, while others raised concerns about hidden biases in how models respond.

If AI performs better when addressed rudely, does that mean polite users get lower-quality answers? Are we dealing with a system that reacts more to pressure than to logic?

These questions aren’t just theoretical—they touch the core of model design.

As the researchers noted, AI doesn’t grasp emotions the way humans do, but it does respond to linguistic cues that suggest dissatisfaction or frustration. It behaves as if it focuses harder when it “senses” user frustration, even though that frustration exists only in the wording, not as a real feeling.

The findings, as reported by Digital Trends, ran counter to prevailing expectations about how best to interact with intelligent systems.

Instead of reinforcing the idea that politeness yields better results, the data suggested that a sharper tone might be more effective in triggering accurate responses.

This contradiction between what’s “ethical” and what’s “effective” presents a real challenge for developers: how do we build fair models that aren’t tone-sensitive, and that deliver consistent quality regardless of user style?
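
One way developers could probe this kind of tone sensitivity is a consistency check: ask the same question in several tones and flag the model if its chosen option changes. The Python sketch below is a minimal illustration under stated assumptions: the tone templates, the function names, and the two stub "models" are hypothetical stand-ins for a real chatbot API, not anything taken from the Penn State study.

```python
from typing import Callable, Dict

# Hypothetical tone templates; not the wording used in the Penn State study.
TONE_TEMPLATES: Dict[str, str] = {
    "very_polite": "Would you be so kind as to answer this question? {q}",
    "neutral": "{q}",
    "very_rude": "Just answer this, if you can even manage it: {q}",
}

def answers_by_tone(question: str, ask: Callable[[str], str]) -> Dict[str, str]:
    """Ask the same question in every tone and collect the chosen option."""
    return {tone: ask(template.format(q=question))
            for tone, template in TONE_TEMPLATES.items()}

def is_tone_consistent(question: str, ask: Callable[[str], str]) -> bool:
    """True if the model picks the same option regardless of tone."""
    return len(set(answers_by_tone(question, ask).values())) == 1

# Stub models standing in for a real chatbot API (illustrative assumption).
def steady_model(prompt: str) -> str:
    # Always picks the same option, whatever the tone.
    return "B"

def tone_sensitive_model(prompt: str) -> str:
    # Picks the correct option only when the prompt contains the rude phrase.
    return "B" if "if you can even manage it" in prompt else "C"

q = "Which planet is largest? A) Mars B) Jupiter C) Venus D) Earth"
print(is_tone_consistent(q, steady_model))          # True
print(is_tone_consistent(q, tone_sensitive_model))  # False
```

In practice a check like this would run over a whole question set and many repetitions, since a single answer says little about a model that samples its output.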

🔍 Why Question Type Amplifies the Study’s Impact

One of the study’s most credible aspects was the nature of the questions posed to the model.

Researchers didn’t rely on generic queries or casual prompts—they used multiple-choice tests that demanded factual precision and logical analysis.

This format doesn’t allow for vague or creative answers. It forces the model to choose one correct option among several, so any change in accuracy can be measured directly when only the phrasing of the question changes.

This is where the results gain weight.

If rudeness boosts performance in high-precision tasks, then tone’s impact may not be limited to casual conversation; it could extend to education, testing, and professional assessments.

It means a user’s tone could influence answer quality without the user realizing it, even in critical contexts.

In other words, the study didn’t just test responses; it tested how consistently the model performs when the same question is asked under pressure. That makes its findings more than a surface-level behavioral observation.

❓ Frequently Asked Questions About the Penn State Study

➊ Do I need to be rude to get accurate answers from AI?

Not necessarily. The study reveals response biases but doesn’t recommend rudeness as a strategy. The goal is to understand model behavior—not encourage aggression.

➋ Does AI understand emotions?

No. AI lacks emotional awareness. It reacts to linguistic signals that imply dissatisfaction, which act as performance triggers.

➌ Does tone affect answer quality?

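It can. In the study, rude phrasing was associated with somewhat higher accuracy on multiple-choice questions. One interpretation is that a sharper tone prompts the model to focus more, though this is an explanation rather than a proven mechanism.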

➍ Do these results apply to all AI models?

The study was conducted on ChatGPT. Results may vary across models and training methods, but the findings invite broader investigation.

➎ Can these insights improve chatbot design?

Yes. They can help developers build systems whose answer quality depends less on the user’s tone, without encouraging aggressive behavior. The goal is to balance performance with an ethical user experience.